Revision as of 13:16, 12 April 2011

AUDIBLE - Artificial audition for mobile robots

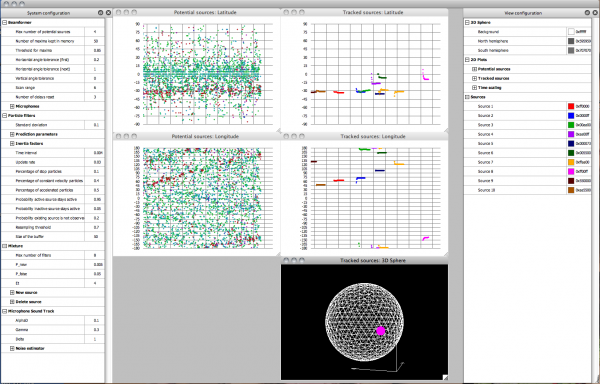

AUDIBLE is an artificial auditory system that gives a robot the ability to locate and track sounds, separate simultaneous sound sources, and recognise simultaneous speech. We demonstrate that these capabilities can be implemented using an array of microphones, without trying to imitate the human auditory system. The sound source localisation and tracking algorithm uses a steered beamformer to locate sources, which are then tracked using a multi-source particle filter. Separation of simultaneous sound sources is achieved using a variant of the Geometric Source Separation (GSS) algorithm, combined with a multi-source post-filter that further reduces noise, interference and reverberation. Speech recognition is performed on the separated sources, either directly or by using Missing Feature Theory (MFT) to estimate the reliability of the speech features. The results show that up to four simultaneous sound sources can be tracked, even in noisy and reverberant environments. Real-time control of the robot following a sound source is also demonstrated. The proposed sound source separation approach achieves a 13.7 dB improvement in signal-to-noise ratio over a single microphone when three speakers are present. In these conditions, the system demonstrates more than 80% accuracy on digit recognition, higher than most human listeners could obtain in our evaluation when recognising only one of these sources. Together, these capabilities make it possible for humans to interact more naturally with a mobile robot in real-life settings.
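To give a rough idea of what "steering" a beamformer means, the following is a minimal sketch only, not the AUDIBLE implementation. It assumes a linear array, a single far-field source, and whole-sample delays, and it works in the time domain, whereas the publications listed on this page describe a frequency-domain steered beamformer. The function names `simulate_array`, `steered_power` and `locate` are hypothetical and chosen for illustration.

```python
import random


def simulate_array(signal, n_mics, delay):
    """Hypothetical test helper: mic m hears the source m*delay samples late."""
    n = len(signal)
    channels = []
    for m in range(n_mics):
        shift = m * delay
        # Zero-pad wherever the shifted index falls outside the recording.
        channels.append(
            [signal[i - shift] if 0 <= i - shift < n else 0.0 for i in range(n)]
        )
    return channels


def steered_power(channels, delay):
    """Energy of the delay-and-sum output when steering to `delay` samples per mic."""
    n = len(channels[0])
    power = 0.0
    for i in range(n):
        acc = 0.0
        for m, channel in enumerate(channels):
            j = i + m * delay  # undo the delay assumed for mic m
            if 0 <= j < n:
                acc += channel[j]
        power += acc * acc
    return power


def locate(channels, max_delay):
    """Scan candidate per-mic delays and return the one with the highest energy."""
    candidates = range(-max_delay, max_delay + 1)
    return max(candidates, key=lambda d: steered_power(channels, d))


# Usage: a white-noise source reaching 4 microphones with 3 samples of delay per mic.
rng = random.Random(0)
source = [rng.gauss(0.0, 1.0) for _ in range(2000)]
channels = simulate_array(source, n_mics=4, delay=3)
print(locate(channels, max_delay=5))  # should recover the simulated per-mic delay
```

When the steering delay matches the true inter-microphone delay, the channels add coherently and the output energy peaks; every other candidate sums misaligned copies and yields less energy. Mapping the recovered delay to a direction would additionally require the array geometry and the sampling rate, which this sketch leaves out.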

Équipe / Team

- François Grondin

- Jean-Marc Valin

- François Michaud

- Jean Rouat

- Simon Brière

- Dominic Létourneau

Nouvelles / News

December 2009: Congratulations to Ben Passow (PhD student) and Mario Gongora, who won the Annual Machine Intelligence Competition run by the British Computer Society with their entry called 'Fly By Ear'. This is the second year in a row that a team from the CCI has won this competition. They are using the ManyEars package for sound source localization.

Video

Installation

Separation

- Résultats graphiques / Graphical results

- Entrée : 3 personnes récitant 4 chiffres en séquence / Input: 3 people reciting 4 digits in sequence

- Sortie séparée 1 / Separated sound source 1

- Sortie séparée 2 / Separated sound source 2

- Sortie séparée 3 / Separated sound source 3

- Results DSP (digit) March 2007

- Results DSP (voices) March 2007

Publications

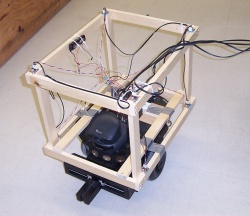

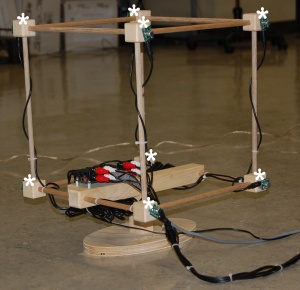

- Brière, S., Valin, J.-M., Michaud, F., Létourneau, D. (2008) “Embedded auditory system for small mobile robots,” to be presented at IEEE International Conference on Robotics and Automation. (pdf)

- Valin, J.-M., Yamamoto, S., Rouat, J., Michaud, F., Nakadai, K., Okuno, H. (2007), “Robust recognition of simultaneous speech by a mobile robot,” IEEE Transactions on Robotics, 23(4):742-752. (pdf)

- Valin, J.-M., Michaud, F., Rouat, J. (2007), “Robust localization and tracking of simultaneous moving sound sources using beamforming and particle filtering,” Robotics and Autonomous Systems Journal, 55: 216-228. (pdf)

- Brière, S., Létourneau, D., Fréchette, M., Valin, J.-M., Michaud, F. (2006), “Embedded and integrated audition for a mobile robot,” Proceedings AAAI Fall Symposium Workshop Aurally Informed Performance: Integrating Machine Listening and Auditory Presentation in Robotic Systems, FS-06-01, 6-10. (pdf)

- Valin, J.-M., Michaud, F., Rouat, J. (2006), “Robust 3D localization and tracking of sound sources using beamforming and particle filtering,” Proceedings International Conference on Acoustics, Speech, and Signal Processing, 841-844.

- Valin, J.-M. (2005), "Auditory system for a mobile robot", Ph.D. Thesis, Department of Electrical Engineering and Computer Engineering, Université de Sherbrooke, August. (pdf)

- Yamamoto, S., Nakadai, K., Valin, J.-M., Rouat, J., Michaud, F., Komatani, K., Ogata, T., Okuno, H. (2005), “Making a robot recognize three simultaneous sentences in real-time,” Proceedings IEEE/RSJ International Conference on Intelligent Robots and Systems, 897-902. (pdf)

- Yamamoto, S., Valin, J.-M., Nakadai, K., Rouat, J., Michaud, F., Ogata, T., Okuno, H. (2005), “Enhanced robot speech recognition based on microphone array source separation and missing feature theory,” IEEE International Conference on Robotics and Automation, 1489-1494.

- Valin, J.-M., Rouat, J., Michaud, F. (2004), "Enhanced robot audition based on microphone array source separation with post-filter", Proceedings IEEE/RSJ International Conference on Intelligent Robots and Systems, 2123-2128. (pdf)

- Valin, J.-M., Michaud, F., Hadjou, B., Rouat, J. (2004), "Localization of simultaneous moving sound sources for mobile robot using a frequency-domain steered beamformer approach", Proceedings IEEE International Conference on Robotics and Automation, 1033-1038. (pdf)

- Valin, J.-M., Rouat, J., Michaud, F. (2004), "Microphone array post-filter for separation of simultaneous non-stationary sources", accepted ICASSP. (pdf)

- Valin, J.-M., Michaud, F., Létourneau, D., Rouat, J. (2003), "Robust sound source localization using a microphone array on a mobile robot", Proceedings IEEE/RSJ International Conference on Intelligent Robots and Systems, 1228-1233. (pdf)

- Robust localization and tracking of simultaneous moving sound sources using beamforming and particle filtering. Valin, J.-M., Michaud, F., Rouat, J. Demande de brevet déposée / Patent pending, 27 April 2005, US 11/116,117.